AI Refund Fraud in Food Delivery | What Apps Must Know

AI refund fraud in food delivery is rising fast. Some users now edit ordinary order photos to create synthetic evidence that looks real enough to trigger refunds. The financial impact falls on both platforms and restaurants, while support teams struggle with high claim volumes and little time to review media. As fake food damage photos and AI-edited media increase, the food delivery industry needs real-time detection that can identify synthetic refund scams before refunds are approved.

The rise of AI manipulation in delivery apps is creating a new threat pattern.

False refund claims are easier to make, harder to spot, and often slip through auto-refund systems. The problem is not limited to food delivery: reports show that fraudulent returns cost retailers more than $103 billion in 2024.

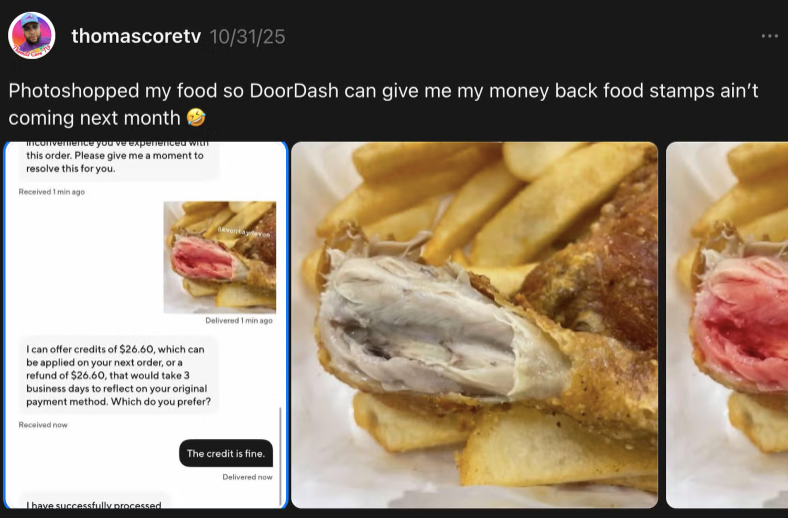

More concerning, TikTok and YouTube now host step-by-step refund “hacks” in which creators record themselves editing meals, swapping textures, and building fake complaint photos. The playbook is public.

Anyone who watches a short clip can learn how to run the same trick. Image editors can turn a normal meal into spoiled food with little effort. Raw centers, cracked shells, mold patterns, and fake leaks can be generated in seconds.

As a result, fake food damage photos and AI-edited food images arrive at rates that make manual review unreliable.

One survey found that 45% of American shoppers broke refund rules in the past year.

This Reddit user summed up the issue well.

Support teams have to deal with the aftermath.

Most support teams weren’t built for forensic review. They’re short on time, tools, and staffing, which makes reviews inconsistent.

They can spot basic fakes, but newer edits hide their traces well. Audio fakes and video manipulation create new blind spots. While most claims are honest, the volume of synthetic claims is rising fast.

This article covers what delivery platforms should prepare for:

How AI damage generators work

How auto-refunds and synthetic evidence interact

Real cases across India and the U.S.

Future threats like deepfake delivery videos and AI voice complaints

Why real-time, multimodal detection is now essential

AI tools make anyone a “damage designer”

The rising popularity of AI editing tools like Gemini has reshaped refund abuse. Many users now take normal meal photos and alter them to look raw, burnt, spilled, or spoiled.

The work takes minutes. Colors, textures, and shadows are easy to adjust. These edited images then enter support queues as real complaints.

Most image-editing apps can add texture, create shadows, or swap surfaces with little effort, producing fake food damage photos that look real enough to pass early checks.

The rise of synthetic-evidence refund scams has strained delivery apps. The images are fast to make and hard to review, and they undermine food delivery trust and safety because most claims are handled by support agents without deeper checks.

These tools can build:

Raw meat textures

Fake burns

Staged leaks

Mold-like patterns

Damaged packaging

Auto-refund systems amplify the risk

Adding to this problem is the convenience of auto-refunds.

Auto-refunds help real users when something goes wrong, but they also open the door to fraud: a fake image can trigger a refund before anyone looks at it.

Data from Incognia shows that about 48% of consumer-side fraud on delivery apps is tied to refund claims. This includes cases where the image, story, or evidence is shaped to match the claim.

Auto-refund paths allow these claims to move through without much review.

Recent cases show how common the issue has become. The mix of fast refunds and simple editing tools creates a steady stream of claims built on fake food damage photos.

Real cases from Zomato, Swiggy, and DoorDash

Refund abuse has reached global delivery brands as AI-edited food images spread across social apps. The cases differ by market but follow the same pattern: a normal dish turned into false evidence.

In India, a Zomato customer submitted a photo of a damaged cake to claim a ₹1,820 refund. The image (as seen below) carried edits that mimicked natural spoilage.

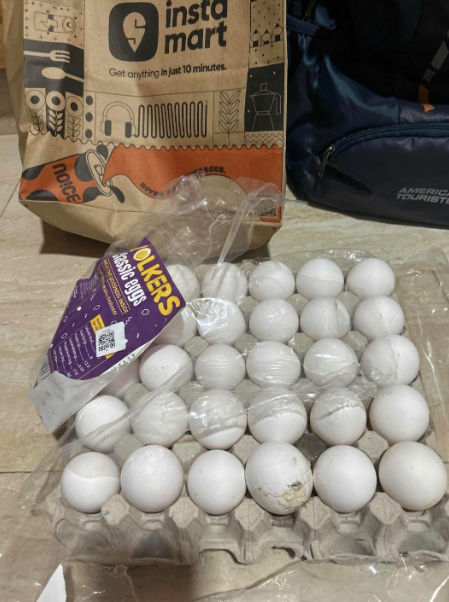

A more recent Swiggy Instamart case involved a user who generated photos of cracked eggs with a mobile AI tool. The claim passed because the photo looked real.

In the U.S., DoorDash faced cases where cooked meals were edited to appear raw. These AI-edited food images were used to request refunds that would have been approved without deeper review.

How this affects food delivery apps and restaurants

AI manipulation in delivery apps has created pressure on both sides of the marketplace. Platforms face growing refund abuse, while restaurants absorb losses tied to staged claims.

Impact on food delivery apps

Higher refund burn

Support teams slowed by synthetic evidence refund scams

Concern over deepfake refund scams and edited delivery proof

Strain on food delivery fraud prevention systems

Rising interest from regulators

Customer frustration when policies tighten

Impact on restaurants

Revenue loss from false refunds

Time spent disputing claims built on synthetic photos

Lower ratings tied to edited complaint images

Drops in order volume triggered by rating hits

Stress on staff who must defend real orders

Growing doubt in food delivery trust and safety systems

Why food delivery apps need real-time detection

The speed of refunds once helped platforms grow, but now the same speed makes synthetic evidence harder to stop. AI-edited food images and staged delivery complaints blend into normal review flows.

Manual flagging and after-the-fact audits do little once the refund has been paid and the rating hit has been recorded.

Real-time detection matters because fraud now happens during the request, not after. AI food fraud detection must run before the refund is granted.

Without it, the platform pays for claims built on fake food damage photos, and restaurants lose trust in food delivery fraud prevention frameworks. Every delayed fix increases refund burn, support cost, and tension with merchant partners.
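The ordering argument above, detection before approval, can be illustrated with a minimal Python sketch of a pre-approval gate. It assumes a hypothetical detector has already scored the claim's evidence (`synthetic_score`, from 0 to 1) before the refund decision runs; the class names, thresholds, and amounts are illustrative assumptions, not Contrails AI's API or any platform's real policy.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    MANUAL_REVIEW = "manual_review"
    FRAUD_HOLD = "fraud_hold"


@dataclass
class RefundClaim:
    order_id: str
    amount: float           # refund requested, in the platform's currency
    synthetic_score: float  # hypothetical detector output: 0.0 = likely authentic, 1.0 = likely AI-edited


# Hypothetical thresholds; a real platform would tune these on its own labeled claims.
AUTO_APPROVE_MAX_AMOUNT = 500.0
REVIEW_SCORE = 0.5
HOLD_SCORE = 0.9


def decide(claim: RefundClaim) -> Decision:
    """Decide a refund only after the evidence has been scored, so no money moves first."""
    if claim.synthetic_score >= HOLD_SCORE:
        # Strong synthetic signal: hold for the fraud team instead of paying out.
        return Decision.FRAUD_HOLD
    if claim.synthetic_score >= REVIEW_SCORE or claim.amount > AUTO_APPROVE_MAX_AMOUNT:
        # Ambiguous evidence or a large refund: send to a human agent.
        return Decision.MANUAL_REVIEW
    # Low synthetic score and a small amount: keep the fast path for honest users.
    return Decision.AUTO_APPROVE


if __name__ == "__main__":
    claims = [
        RefundClaim("A1", 300.0, 0.10),
        RefundClaim("A2", 300.0, 0.70),
        RefundClaim("A3", 300.0, 0.95),
        RefundClaim("A4", 1500.0, 0.10),
    ]
    for claim in claims:
        print(claim.order_id, decide(claim).value)
    # A1 auto_approve
    # A2 manual_review
    # A3 fraud_hold
    # A4 manual_review
```

Keeping a fast path for low-score, low-value claims reflects the fact that most claims are honest; only the refund decision waits on the score, not the customer's whole support experience.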

What future fraud may look like

Refund abuse in delivery apps may grow far beyond edited food photos. Tools are improving to the point where AI-edited food images may look identical to real damage, even under detailed inspection.

In the near future, support teams may face full complaint packages where every piece of “proof” is synthetic.

Claims may not rely on user-created photos at all. AI manipulation in delivery apps could generate full delivery narratives with deepfake courier identities, fake timestamps, and GPS spoofing tied to order history.

Future tactics may include:

Synthetic video proof tied to staged tracking logs

AI text scripts tailored to the platform refund logic

Detection evasion through metadata-scrubbing tools
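Because metadata can be scrubbed or faked, a photo's capture time is only one weak signal, but checking it against the order timeline is cheap. The Python sketch below assumes the claim flow has already extracted the photo's capture time (for example from the EXIF `DateTimeOriginal` tag) and knows when the order was delivered; the function name, flag labels, and six-hour window are hypothetical, not taken from any platform or from Contrails AI.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical tolerance: how long after delivery a damage photo can plausibly be taken.
MAX_DELAY_AFTER_DELIVERY = timedelta(hours=6)


def metadata_flags(
    delivered_at: datetime,
    photo_taken_at: Optional[datetime],
    claim_submitted_at: datetime,
) -> list[str]:
    """Return reasons the photo's timestamp conflicts with the order timeline.

    All datetimes must be consistently naive or consistently timezone-aware.
    An empty list means no timestamp conflict was found, not that the photo is authentic.
    """
    if photo_taken_at is None:
        # Missing capture time is a signal, not proof: metadata is often stripped innocently.
        return ["missing_capture_time"]
    flags = []
    if photo_taken_at < delivered_at:
        flags.append("photo_predates_delivery")
    if photo_taken_at > claim_submitted_at:
        flags.append("photo_after_claim_submission")
    if photo_taken_at - delivered_at > MAX_DELAY_AFTER_DELIVERY:
        flags.append("photo_long_after_delivery")
    return flags


if __name__ == "__main__":
    delivered = datetime(2025, 1, 10, 19, 30)
    submitted = datetime(2025, 1, 10, 20, 0)
    print(metadata_flags(delivered, datetime(2025, 1, 10, 18, 0), submitted))
    # ['photo_predates_delivery']
    print(metadata_flags(delivered, None, submitted))
    # ['missing_capture_time']
```

A missing timestamp is flagged for review rather than rejected, since screenshots and many messaging apps often drop camera metadata for ordinary reasons.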

One thing is certain: fraud is moving toward automation, not isolated behavior. Detection has to match that shift before scale makes it unmanageable.

Where Contrails AI fits in the refund review pipeline

The gap between real food complaints and synthetic refund claims is closing.

Photos look authentic. Audio calls sound natural. Claim narratives are repeated across fraud circles and automation tools. Delivery support systems that rely on trust and speed are now facing abuse at scale.

Contrails AI helps platforms separate genuine complaints from synthetic media at scale. It detects AI-edited food images, deepfake refund scams, synthetic voice support calls, and metadata inconsistencies across the claim flow. Detection runs in real time, so refunds are not approved before the evidence has been checked.

As refund abuse grows, the question shifts from “can we detect it?” to “can we detect it at scale without slowing service?”

If your platform is reaching that point, book a demo and see how Contrails AI’s multimodal forensic detection works across live delivery scenarios.
